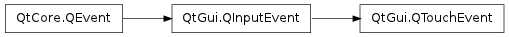

QTouchEvent

Note

This class was introduced in Qt 4.6.

Synopsis

Functions

- def deviceType()

- def setDeviceType(adeviceType)

- def setTouchPoints(atouchPoints)

- def setWidget(awidget)

- def touchPoints()

- def widget()

Detailed Description

The PySide.QtGui.QTouchEvent class contains parameters that describe a touch event.

Enabling Touch Events

Touch events occur when one or more touch points are pressed, released, or moved on a touch device (such as a touch-screen or track-pad). To receive touch events, widgets must have the Qt.WA_AcceptTouchEvents attribute set, and graphics items must have acceptTouchEvents set to true (see QGraphicsItem.setAcceptTouchEvents()).

When using widgets based on PySide.QtGui.QAbstractScrollArea, enable the Qt.WA_AcceptTouchEvents attribute on the scroll area's PySide.QtGui.QAbstractScrollArea.viewport() rather than on the scroll area itself.
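The helper below sketches where the attribute has to go. It is hypothetical (not part of PySide) and relies on duck typing: any object with a callable viewport() is treated like a QAbstractScrollArea. The attribute is passed in as a parameter so the sketch does not need to import PySide.

```python
def touch_receiver(widget):
    """Return the widget that should carry Qt.WA_AcceptTouchEvents.

    Hypothetical helper. QAbstractScrollArea subclasses (QScrollArea,
    QGraphicsView, QTextEdit, ...) receive input on their viewport() child,
    so the attribute belongs there; any other widget is returned unchanged.
    """
    viewport = getattr(widget, "viewport", None)
    return viewport() if callable(viewport) else widget


def enable_touch_events(widget, accept_touch_attr):
    """Set accept_touch_attr (normally Qt.WA_AcceptTouchEvents) on the right widget.

    Hypothetical helper: the attribute is a parameter only so this sketch
    stays independent of PySide. Returns the widget that was modified.
    """
    target = touch_receiver(widget)
    target.setAttribute(accept_touch_attr, True)
    return target
```

With real objects this would be called as `enable_touch_events(view, Qt.WA_AcceptTouchEvents)`. Graphics items do not use widget attributes; for them the equivalent is `item.setAcceptTouchEvents(True)`.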

As with PySide.QtGui.QMouseEvent, Qt automatically grabs each touch point on the first press inside a widget, and that widget receives all updates for the touch point until it is released. A widget can receive events for several touch points, and multiple widgets can receive touch events at the same time.

Event Handling

All touch events are of type QEvent.TouchBegin, QEvent.TouchUpdate, or QEvent.TouchEnd. To receive touch events, reimplement QWidget.event() or QAbstractScrollArea.viewportEvent() for widgets, and QGraphicsItem.sceneEvent() for items in a graphics view.

The QEvent.TouchUpdate and QEvent.TouchEnd events are sent to the widget or item that accepted the QEvent.TouchBegin event. If the QEvent.TouchBegin event is neither accepted nor filtered by an event filter, no further touch events are sent until the next QEvent.TouchBegin.

The PySide.QtGui.QTouchEvent.touchPoints() function returns a list of all touch points contained in the event. Information about each touch point can be retrieved using the QTouchEvent.TouchPoint class. The Qt.TouchPointState enum describes the different states that a touch point may have.
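The sketch below shows one way to consume touchPoints(): it keeps the last known position of every active point, keyed by its id(), and forgets points once their state is released. TouchTracker is a hypothetical name; the code works with anything shaped like QTouchEvent.TouchPoint (id(), state(), pos()), and the released state is passed in rather than imported.

```python
class TouchTracker(object):
    """Hypothetical tracker of active touch points, keyed by TouchPoint.id().

    released_state should be Qt.TouchPointReleased; it is a parameter only
    so the sketch does not import PySide.
    """

    def __init__(self, released_state):
        self._released = released_state
        self.active = {}  # id -> last reported pos()

    def update(self, touch_points):
        """Apply one event's touchPoints(); return how many points remain active."""
        for tp in touch_points:
            if tp.state() == self._released:
                # The finger was lifted: this point's sequence is finished.
                self.active.pop(tp.id(), None)
            else:
                # Pressed, moved and stationary points all report a current pos().
                self.active[tp.id()] = tp.pos()
        return len(self.active)
```

In a widget, an event() reimplementation would call `self.tracker.update(event.touchPoints())` for QEvent.TouchBegin, QEvent.TouchUpdate and QEvent.TouchEnd, and defer to the base class for all other event types.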

Event Delivery and Propagation

By default, QWidget.event() translates the first non-primary touch point in a PySide.QtGui.QTouchEvent into a PySide.QtGui.QMouseEvent. This makes it possible to enable touch events on existing widgets that do not normally handle PySide.QtGui.QTouchEvent. See the Caveats section below for special considerations when doing this.

QEvent.TouchBegin is the first touch event sent to a widget. It carries a special accept flag that indicates whether the receiver wants the event; by default, the event is accepted. Call PySide.QtCore.QEvent.ignore() if your widget does not handle the touch event. The QEvent.TouchBegin event is propagated up the parent widget chain until a widget accepts it with PySide.QtCore.QEvent.accept() or an event filter consumes it. For QGraphicsItems, the QEvent.TouchBegin event is propagated to items under the mouse, similar to mouse event propagation for QGraphicsItems.
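The delivery rules described above can be summarized as a small, Qt-free model. It only illustrates the documented behavior and is not how QApplication is implemented: `accepts(widget)` stands for "the widget's handler left TouchBegin accepted", `parent_of` walks the parent chain, and both, like the class name TouchSequence, are hypothetical. Event filters are not modeled.

```python
class TouchSequence(object):
    """Hypothetical model of TouchBegin propagation and the grab that follows."""

    def __init__(self, accepts, parent_of):
        self._accepts = accepts      # callable(widget) -> bool
        self._parent_of = parent_of  # callable(widget) -> parent widget or None
        self.receiver = None

    def begin(self, widget):
        """Offer TouchBegin to widget, then to each ancestor until one accepts."""
        self.receiver = None
        while widget is not None:
            if self._accepts(widget):
                self.receiver = widget
                break
            widget = self._parent_of(widget)
        return self.receiver

    def update(self):
        """TouchUpdate/TouchEnd go only to the accepting widget; None means dropped."""
        return self.receiver
```

If no widget in the chain accepts, `update()` returns None for the rest of the sequence, mirroring the rule that no further touch events are sent until the next QEvent.TouchBegin.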

Touch Point Grouping

As mentioned above, several widgets can receive QTouchEvents at the same time. However, Qt never sends duplicate QEvent.TouchBegin events to the same widget. Without this guarantee, duplicates could occur during propagation if, for example, the user touched two separate widgets in a PySide.QtGui.QGroupBox and both widgets ignored the QEvent.TouchBegin event, so that both events propagated to the group box.

To avoid this, Qt will group new touch points together using the following rules:

- When the first touch point is detected, the destination widget is determined first by its location on screen and then by the propagation rules.

- When additional touch points are detected, Qt first checks whether there are any active touch points on any ancestor or descendant of the widget under the new touch point. If there are, the new touch point is grouped with the first, and both are sent in a single PySide.QtGui.QTouchEvent to the widget that handled the first touch point. (The widget under the new touch point does not receive an event.)

This makes it possible for sibling widgets to handle touch events independently while making sure that the sequence of QTouchEvents is always correct.
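The second grouping rule can be expressed as a lookup over the widget tree. This sketch models only the documented rule: `handlers` lists the widgets currently handling at least one active touch point, `parent_of` is a hypothetical parent lookup, and widgets are plain strings so the code runs without Qt.

```python
def _ancestors(widget, parent_of):
    """Yield the parent, grandparent, ... of widget (not widget itself)."""
    widget = parent_of(widget)
    while widget is not None:
        yield widget
        widget = parent_of(widget)


def group_target(widget, handlers, parent_of):
    """Hypothetical model: where a new touch point under `widget` is delivered.

    If a handler is `widget` itself, one of its ancestors, or one of its
    descendants, the new point joins that handler's group. Otherwise the
    point starts a new group at `widget`, and normal TouchBegin propagation
    then decides the actual receiver.
    """
    for h in handlers:
        if (h == widget
                or h in _ancestors(widget, parent_of)
                or widget in _ancestors(h, parent_of)):
            return h
    return widget
```

For two sibling widgets, neither is an ancestor of the other, so each finger starts its own group; that is what lets siblings handle touch independently.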

Mouse Events and the Primary Touch Point

PySide.QtGui.QTouchEvent delivery is independent of PySide.QtGui.QMouseEvent delivery. On some windowing systems, mouse events are also sent for the primary touch point. This means your widget may receive both a PySide.QtGui.QTouchEvent and a PySide.QtGui.QMouseEvent for the same user interaction point. Use the QTouchEvent.TouchPoint.isPrimary() function to identify the primary touch point.

On some systems, it is possible to receive touch events without a primary touch point. This simply means that no mouse event is generated for the touch points in the PySide.QtGui.QTouchEvent.
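When a widget handles both event types, one way to avoid reacting twice to the same finger is to let the mouse handlers deal with the primary point and the touch handler deal with the rest. That division of labor is an assumption for illustration; the hypothetical helper below only performs the split and works on any objects with an isPrimary() method.

```python
def split_primary(touch_points):
    """Hypothetical helper: split touchPoints() into (primary, others).

    primary is the point whose isPrimary() is true, or None when the event
    has no primary point (in which case no mouse event is synthesized).
    """
    primary = None
    others = []
    for tp in touch_points:
        if primary is None and tp.isPrimary():
            primary = tp
        else:
            others.append(tp)
    return primary, others
```

Whether mouse events are actually generated for the primary point depends on the windowing system, so a widget relying on this split should still behave sensibly when `primary` is None.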

Caveats

- As mentioned above, enabling touch events means multiple widgets can receive touch events simultaneously. Combined with the default QWidget.event() handling for QTouchEvents, this gives you great flexibility in designing touch user interfaces, but be aware of the implications. For example, the user may be moving a PySide.QtGui.QSlider with one finger while pressing a PySide.QtGui.QPushButton with another, and the signals emitted by these widgets will be interleaved.

- Recursing into the event loop using one of the exec() methods (exec_() in PySide, e.g., QDialog.exec_() or QMenu.exec_()) in a PySide.QtGui.QTouchEvent event handler is not supported. Because there are multiple event recipients, recursion may cause problems, including but not limited to lost events and unexpected infinite recursion.

- QTouchEvents are not affected by a mouse grab or an active pop-up widget. The behavior of QTouchEvents is undefined when opening a pop-up or grabbing the mouse while there is more than one active touch point.
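Because calling exec_() from a touch handler is unsupported, one common workaround (an assumption here, not something this page prescribes) is to record the request in the handler and start the nested loop from a zero-timeout timer after the handler has returned, ideally once the touch sequence has ended. `defer` is a hypothetical name; its `schedule` parameter defaults to QTimer.singleShot, imported lazily so the sketch can be exercised without PySide.

```python
def defer(callback, schedule=None):
    """Hypothetical helper: run callback after the current event handler returns.

    schedule(msec, callable) defaults to PySide's QTimer.singleShot. A zero
    timeout fires once control is back in the event loop, so an exec_()
    inside callback is no longer nested in the touch event handler.
    """
    if schedule is None:
        from PySide.QtCore import QTimer
        schedule = QTimer.singleShot
    schedule(0, callback)
```

For example, a widget could call `defer(self.dialog.exec_)` when it sees QEvent.TouchEnd instead of calling `self.dialog.exec_()` directly. Opening the dialog only after TouchEnd also avoids the undefined pop-up behavior while several touch points are active.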

See also

QTouchEvent.TouchPoint, Qt.TouchPointState, Qt.WA_AcceptTouchEvents, QGraphicsItem.acceptTouchEvents()

- PySide.QtGui.QTouchEvent.DeviceType

This enum represents the type of device that generated a PySide.QtGui.QTouchEvent.

| Constant | Description |
| --- | --- |
| QTouchEvent.TouchScreen | In this type of device, the touch surface and display are integrated. The surface and display typically have the same size, so there is a direct relationship between the touch points' physical positions and the coordinates reported by QTouchEvent.TouchPoint. As a result, Qt allows the user to interact directly with multiple QWidgets and QGraphicsItems at the same time. |
| QTouchEvent.TouchPad | In this type of device, the touch surface is separate from the display. There is no direct relationship between the physical touch location and the on-screen coordinates; instead, they are calculated relative to the current mouse position, and the user must use the touch-pad to move this reference point. Unlike with touch-screens, Qt allows users to interact with only a single PySide.QtGui.QWidget or PySide.QtGui.QGraphicsItem at a time. |

- PySide.QtGui.QTouchEvent.deviceType()

Return type: PySide.QtGui.QTouchEvent.DeviceType

Returns the touch device type, which is of type QTouchEvent.DeviceType.

- PySide.QtGui.QTouchEvent.setDeviceType(adeviceType)

Parameters: adeviceType – PySide.QtGui.QTouchEvent.DeviceType

Sets the device type to adeviceType, which is of type QTouchEvent.DeviceType.

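- PySide.QtGui.QTouchEvent.setTouchPoints(atouchPoints)

Parameters: atouchPoints – list of PySide.QtGui.QTouchEvent.TouchPoint

Sets the list of touch points contained in the touch event.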

- PySide.QtGui.QTouchEvent.setWidget(awidget)

Parameters: awidget – PySide.QtGui.QWidget

Sets the widget for this event.


- PySide.QtGui.QTouchEvent.touchPoints()

Return type: list of PySide.QtGui.QTouchEvent.TouchPoint

Returns the list of touch points contained in the touch event.

- PySide.QtGui.QTouchEvent.widget()

Return type: PySide.QtGui.QWidget

Returns the widget on which the event occurred.
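The DeviceType description implies that touch-pad coordinates are only meaningful relative to the cursor. The sketch below is a hypothetical helper, not part of PySide: it combines deviceType() and touchPoints() to compute per-point movement, switching to TouchPoint.normalizedPos() and lastNormalizedPos() (positions as fractions of the device size) for touch-pads. That switch is an assumption for illustration. The helper uses only these accessors, so fake objects can stand in for a real event.

```python
def touch_deltas(event, touch_screen):
    """Hypothetical helper: movement of each touch point since the last event.

    touch_screen should be QTouchEvent.TouchScreen. On touch-screens the
    widget-local pos()/lastPos() are used; on other devices (touch-pads)
    this sketch uses normalizedPos()/lastNormalizedPos() instead.
    Returns {TouchPoint.id(): (dx, dy)}.
    """
    direct = event.deviceType() == touch_screen
    deltas = {}
    for tp in event.touchPoints():
        if direct:
            cur, last = tp.pos(), tp.lastPos()
        else:
            cur, last = tp.normalizedPos(), tp.lastNormalizedPos()
        deltas[tp.id()] = (cur.x() - last.x(), cur.y() - last.y())
    return deltas
```

On a touch-screen the deltas are in widget pixels, while on a touch-pad they are fractions of the pad's size, so a caller would need to scale the latter before applying them to on-screen content.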